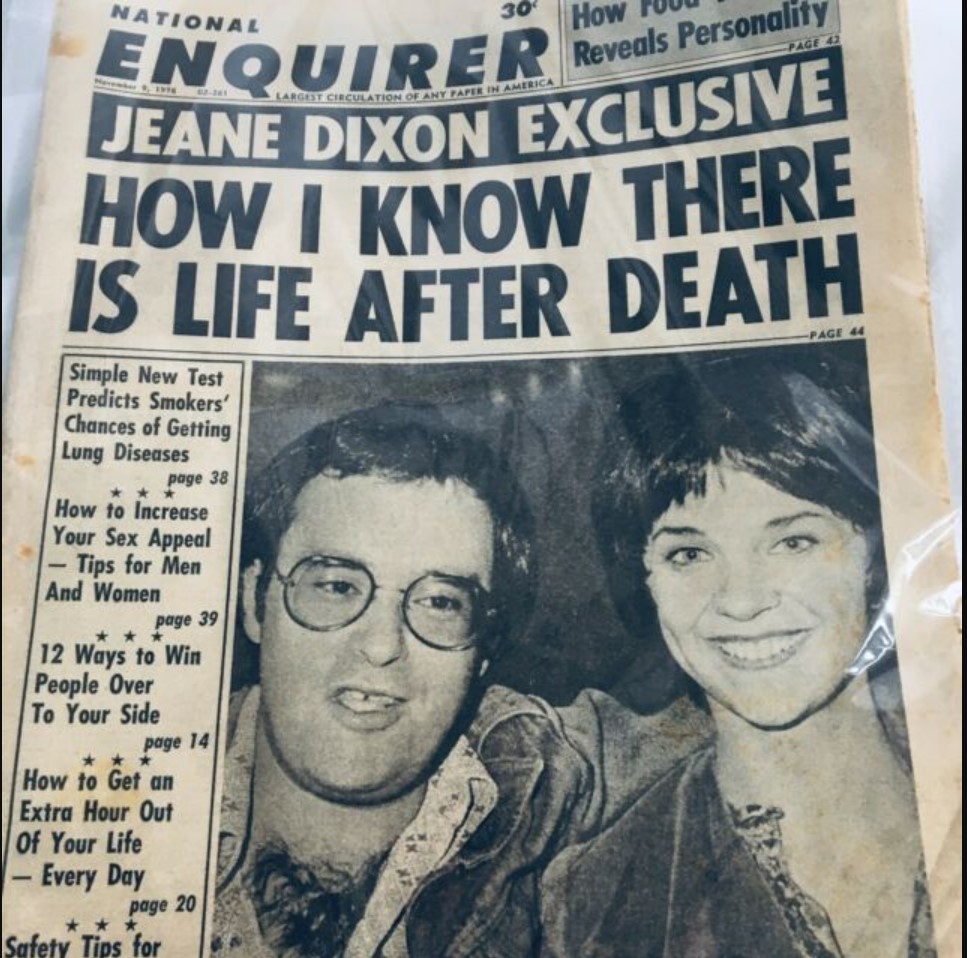

Whenever the misinformation conversation begins, the discussion seems to turn quickly to social media as the reason for its spread. Yet misinformation has been around since the invention of the printing press. Early media outlets quickly learned of the correlation between fake stories and increased circulation. The more sensational a story, the more profits it generated.

My first introduction to “fake news” during my formative years was the National Enquirer newspaper. This so-called newspaper was founded in 1926 and was initially designed to look like a traditional newspaper. However, it was far from credible and often carried conspiratorial headlines. I remember my mom telling me, “Oh, that’s not a ‘real’ newspaper.”

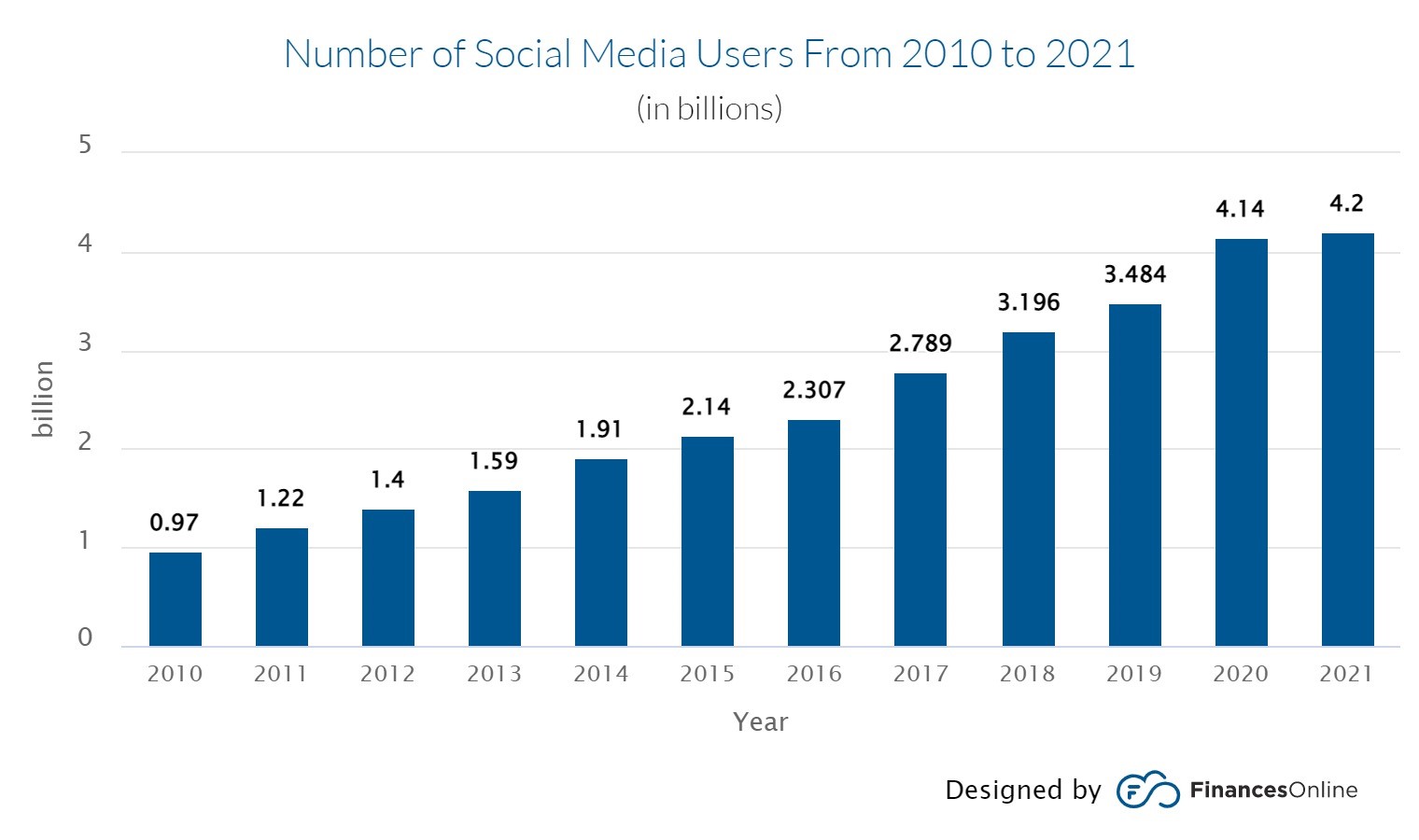

The main difference is the reach that modern media, specifically social media, has over previous media formats. In 1990, newspaper circulation peaked at over 62 million copies. By 2006, the start of social media, circulation had declined to around 52 million copies. In 2000, 56% of Americans got their news via local networks. By 2006, that percentage had fallen, and in 2021, television news viewership dropped 38%. In contrast, all social platforms have seen continued increases in subscribership since 2010. In the first quarter of 2021, there were 4.2 billion social media users worldwide. These platforms can deliver real-time content on a massive scale faster than their predecessors.

Can Social Platforms Help Curb Misinformation?

I admit I am no longer a substantial social media user, but I try to stay abreast of the public’s desire to see social media platforms take action against misinformation. The question I have is whether they are creating systems that can prevent the spread of misinformation. If they are, what effective systems are currently in place, and are they working?

Facebook has taken a lot of heat since the onset of the COVID-19 pandemic, including from the U.S. President, who expressed his fear that the magnitude of misinformation on this platform could irreparably harm the public. Unfortunately, the steps Facebook has taken do not seem to be working, or are not enough in the public’s eye.

Facebook’s misinformation initiatives began after the 2016 presidential election. The company was accused of allowing troll accounts to spread fake news that potentially impacted the 2016 election. Facebook’s VP of News Feed confirmed the changes on the platform in a 2017 statement. The initiatives taken include:

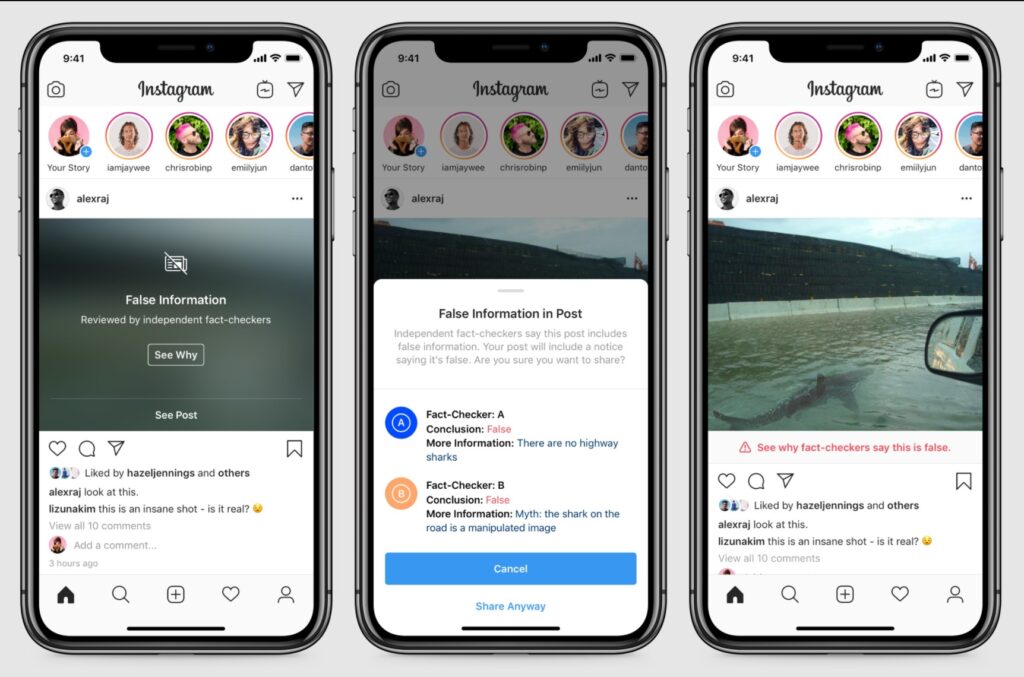

· Using a third-party fact-checking service to identify misinformation

· Flagging misinformation posts and suspending habitual violator accounts

· Using AI to detect and enforce community policies and standards

· Updating technology to stay ahead of nefarious activity

In the wake of COVID-19 and vaccination misinformation, Facebook was again placed in the hot seat. They employed the tactics mentioned in the 2017 policy statement. Capitol Hill maintains its stance that Facebook is not doing enough to prevent misinformation on the platform. Facebook’s CEO feels differently. Their current Community Standards Policies list false news under the integrity and authenticity section. False news is an issue Facebook says it takes seriously. An interesting position they take is drawing a distinction between false news and satire or opinion-based content. Facebook confirms they do not remove content deemed false but place it lower in a user’s news feed.

This policy seems to directly conflict with Facebook.com’s Resource Library content, specifically within their misinformation page. On this page, they list the policy changes from 2019 to 2021 that have taken place since the onset of the pandemic. Most notably, they assert they “remove content” that violates community standards. This assertion seems to contradict their Community Standards Policy, which states that they do not remove false content and just place it lower in a user’s feed.

Which is it, Facebook? Does your algorithm place misinformation lower in the feed or remove it?

In 2020, Facebook’s CEO claimed they should not be the “arbiters of truth.” This statement seems to be more in line with the current Community Standards of Facebook. Again, I am not a significant user of Facebook, although I have noticed fewer disclaimers or fact-checking notifications in my feed since 2020/2021. While I find this currently refreshing, I am unconvinced of Facebook’s effort to curb misinformation on its platform.

What Other Platforms Are Doing

With the focus on Facebook, LinkedIn seems to have stayed out of the headlines regarding the rise of misinformation on its platform. LinkedIn began documenting posts containing misinformation in the first half of 2020. They reported removing over 22,000 posts in that period, over 110,000 posts in the second half of 2020, and 147,490 posts in the first half of 2021. They highlighted their success in preventing 33 million fake account attempts with detection software.

LinkedIn’s Professional Community Policies center around safety, trustworthiness, and professionalism. Within the policies page, they direct users to their publishing guidelines where they remind users that:

· They are to remain professional, and posts should not contain information that is “misleading, fraudulent, obscene, threatening, hateful, defamatory, discriminatory, or illegal.”

· Users are responsible for the content of their articles. This includes liability for harm they cause to others or harm caused to them through their use of the platform.

· The platform can restrict, suspend, or terminate a LinkedIn account. The platform can also disable user articles that violate the User Agreement.

The User Agreement specifies that users agree to provide truthful account information and refrain from posting content that has legal ramifications. LinkedIn’s Community Standards requests its members to refrain from posting content deemed misinformation or disinformation. This includes deepfake images and videos that are designed to manipulate.

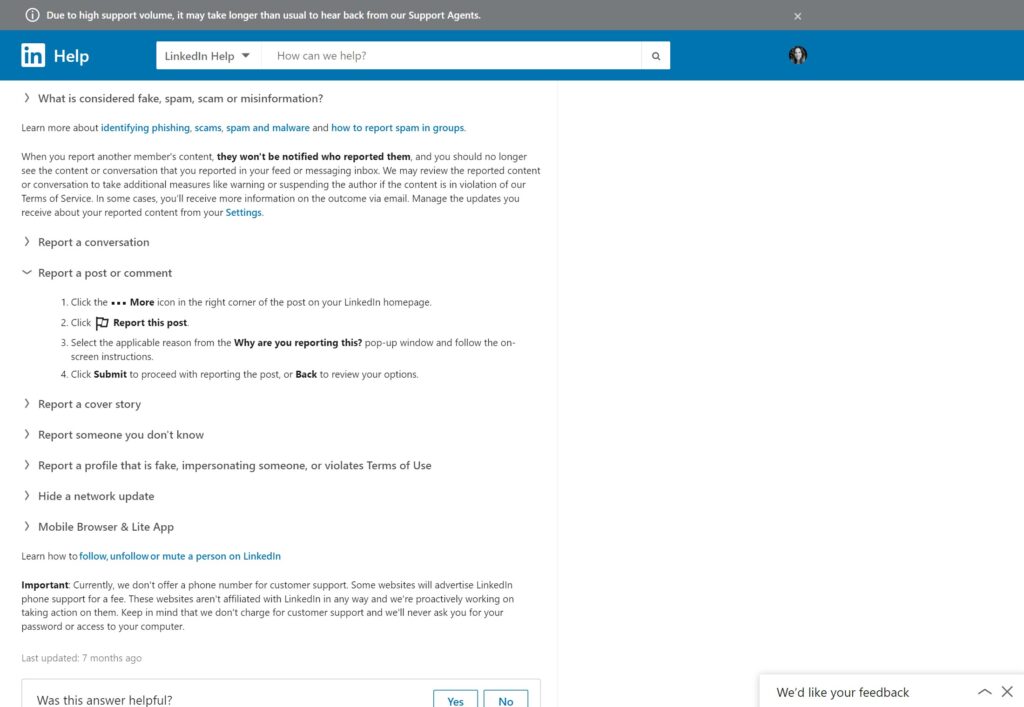

LinkedIn manages misinformation by calling on its users to report any post seen as unsafe, untrustworthy, or unprofessional. Their help page lists the various ways to report content, but nowhere does it mention content deemed misinformation. Thankfully, they offer misinformation as an option when a user wants to report a post.

My concern with LinkedIn’s approach is that users are tasked with the reporting process, which creates the potential for a user to report a competitor to get them kicked off the site. I also worry about how long the review process takes. How long does it take for a reported post to be taken down? In the meantime, the post can be viewed and shared thousands of times.

A Creative Solution

In business, I have always been solution-oriented. It is easy to critique another’s business operations, but that raises the question of how to fix them. I do not believe the “infodemic” should be left solely to social media platforms to solve. Nor do I feel their words align with their actions. I see this as a public enlightenment process and an educational endeavor.

Over the years, government organizations have used public service announcements to inform the public of various issues. My solution to the misinformation challenge is to create public service education materials that educate Americans on:

· What is misinformation?

· How misinformation hurts democracy.

· Who creates misinformation?

· Why are people susceptible to misinformation?

· How to spot misinformation.

· Ways to report misinformation.

· Why it is important to research information.

By informing the public about misinformation, rather than relying on Big Tech companies to do it, we may reach the desired audience and make a difference. Some people are skeptical of Big Tech and are concerned about it censoring dissenting voices. I am not suggesting social media platforms should take a hands-off approach. Quite the contrary. They should be an integral part of the equation in preventing the spread of misinformation. My favorite tactic most platforms employ is fact-checking notifications. This notification attached to user posts helps other viewers evaluate the post’s credibility.

This social media tactic, together with PSAs, could help the public understand how severe a threat misinformation poses to our democracy and deter people from sharing questionable content.

Do you have suggestions that could prevent the spread of misinformation? Please share your thoughts and ideas in the comments section below. I would enjoy extending the conversation. Thank you for being a part of the Visionary Blog conversation.
